Have you ever heard of large language models (LLMs)? They are one of the hottest topics in the AI field these days. However, recent research suggests that these models can make serious errors even on simple commonsense problems. Let’s explore how this happens and what we can do to solve this issue.

What is a Large Language Model?

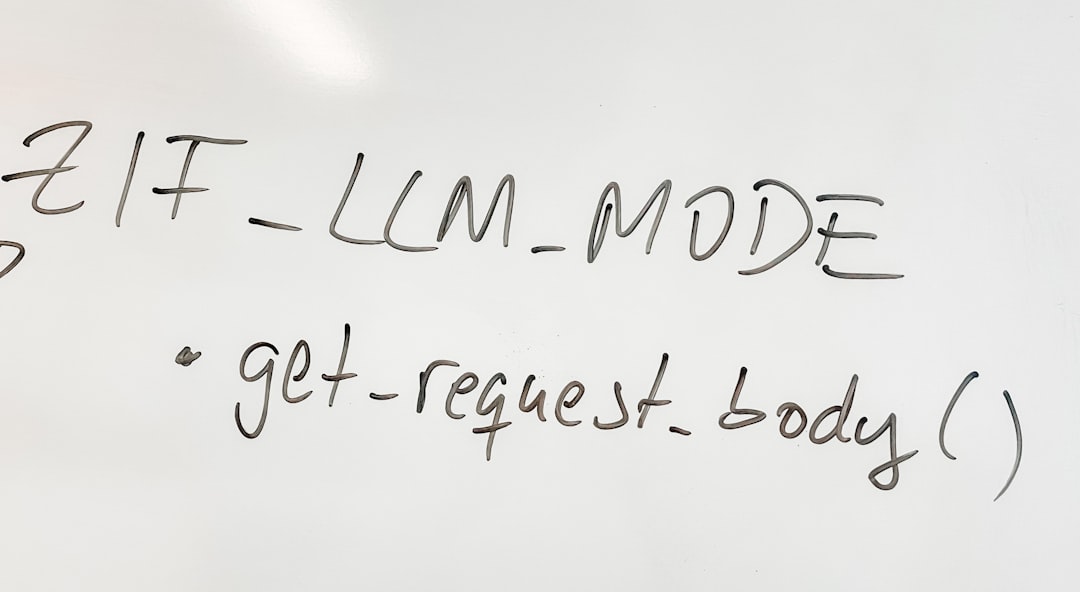

So, what is a large language model, or LLM? In simple terms, it’s an AI model trained on an enormous amount of text data. Thanks to this, it performs exceptionally well on a variety of language tasks, which is why it’s widely used in chatbots, translators, and writing assistants. LLMs are said to follow ‘scaling laws,’ which means that their performance tends to improve as the scale of pre-training (model size, data, and compute) increases.
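To make the scaling idea a bit more concrete, here is a minimal sketch. It assumes that loss falls as a simple power law in the number of parameters, L(N) = (N_c / N)^alpha. The function name and both constants are made up for illustration and are not measurements from any real model.

```python
def power_law_loss(n_params: float, n_c: float = 1e14, alpha: float = 0.08) -> float:
    """Illustrative power-law loss: L(N) = (n_c / N) ** alpha.

    n_c and alpha are hypothetical constants chosen only for illustration.
    """
    if n_params <= 0:
        raise ValueError("n_params must be positive")
    return (n_c / n_params) ** alpha


if __name__ == "__main__":
    # Under this assumed curve, each 10x increase in size lowers the loss a little.
    for n in (1e8, 1e9, 1e10, 1e11):
        print(f"{n:.0e} parameters -> loss {power_law_loss(n):.3f}")
```

Keep in mind that a curve like this only describes average training loss. It says nothing about whether a model will get any particular commonsense question right.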

The Problem: Errors in Simple Commonsense Tasks

No matter how advanced an LLM is, it’s not perfect. Recent research has shown that even the latest LLMs can make serious errors on simple commonsense tasks. For example, they might confidently give wrong answers to questions that humans solve easily, and then justify those answers with illogical explanations. Curious to see what this means? Let’s take an example.

Imagine a question like, “In which season does it snow?” The model might answer “summer” and explain that “it can snow in summer.” Sounds absurd, right?

Various attempts have been made to address this issue. However, methods meant to guide the model to the correct answer, such as enhanced prompts or asking the model to reassess its answer over multiple steps, have mostly failed. This shows that current LLMs still have significant limitations.
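What does multi-step reassessment look like in practice? Here is a rough sketch, not the procedure used in the research. The `ask` parameter is a hypothetical stand-in for a call to whatever LLM you use, and `stubborn_model` is a toy replacement that always gives the same wrong answer, so the example runs without any real model.

```python
from typing import Callable, List


def answer_with_reassessment(
    ask: Callable[[str], str],
    question: str,
    max_rounds: int = 2,
) -> List[str]:
    """Ask a question, then repeatedly ask the model to double-check itself.

    `ask` is a hypothetical function: it takes a prompt and returns the model's text.
    Returns every answer the model gave, in order.
    """
    answers = [ask(question)]
    for _ in range(max_rounds):
        follow_up = (
            f"Question: {question}\n"
            f"Your previous answer: {answers[-1]}\n"
            "Please double-check your reasoning and give your final answer."
        )
        answers.append(ask(follow_up))
    return answers


def stubborn_model(prompt: str) -> str:
    """Toy stand-in for an LLM that never changes its (wrong) answer."""
    return "summer"


if __name__ == "__main__":
    print(answer_with_reassessment(stubborn_model, "In which season does it snow?"))
```

With the toy model, every round returns the same wrong answer. That mirrors the failure described above: asking again does not help if the model stays confident in its mistake.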

The Need for Reassessment: Standardized Benchmarks

So, what should we do next? First, we need to properly understand the issues with the current generation of LLMs and create new standardized benchmarks to improve them. These benchmarks will help us better understand the models’ weaknesses and increase their reliability.
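As a rough idea of what a benchmark does, here is a tiny sketch. It asks each question several times and reports how often the answer matches the expected one. Everything in it is an assumption for illustration: the `ask` function, the two sample questions, and the exact-match scoring are all made up and do not come from any real benchmark.

```python
from typing import Callable, Dict, List, Tuple

# Each item is (question, expected answer). Both items are invented examples.
BenchmarkItem = Tuple[str, str]


def evaluate(
    ask: Callable[[str], str],
    items: List[BenchmarkItem],
    trials: int = 3,
) -> Dict[str, float]:
    """Return, per question, the fraction of trials answered correctly.

    `ask` is a hypothetical function that sends a prompt to a model and returns text.
    Scoring is a case-insensitive exact match, which is deliberately crude.
    """
    scores: Dict[str, float] = {}
    for question, expected in items:
        correct = sum(
            ask(question).strip().lower() == expected.lower()
            for _ in range(trials)
        )
        scores[question] = correct / trials
    return scores


def toy_model(prompt: str) -> str:
    """Toy stand-in for an LLM: right about weeks, wrong about snow."""
    canned = {
        "In which season does it snow?": "summer",
        "How many days are in a week?": " Seven ",
    }
    return canned.get(prompt, "I don't know")


if __name__ == "__main__":
    items = [
        ("In which season does it snow?", "winter"),
        ("How many days are in a week?", "seven"),
    ]
    for question, score in evaluate(toy_model, items).items():
        print(f"{score:.0%}  {question}")
```

Repeating each question matters because real models can give different answers to the same prompt. A standardized benchmark fixes the questions, the number of trials, and the scoring rule so that different models can be compared fairly.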

The crucial point here is that such flaws can have a significant impact on real-world applications. When LLMs are used to make important decisions, their reliability issues can pose great risks. Therefore, it’s essential to be cautious when using them.

Alternative Technologies and Future Research Directions

Finally, we need to consider other AI technologies or models beyond LLMs. For instance, reinforcement learning or hybrid models are possible alternatives. We also need models that better mimic human commonsense and reasoning abilities.

Conclusion

Now, do you have a better understanding of the issues with LLMs and the possible solutions? AI is significantly transforming our lives, but there are still many points that require attention and improvement. We all need to think together and strive to create better AI technologies.