RAG (Retrieval-Augmented Generation) improves generated responses by supplying the model with retrieved information, which tends to yield more accurate and reliable answers than a text generation model used on its own. This article walks through implementing RAG with Ollama and LangChain, from the basic concepts to hands-on code examples.

1. What is RAG?

RAG stands for Retrieval-Augmented Generation. It is a natural language processing (NLP) technique in which passages relevant to a question are first retrieved from an external source, such as a document collection, and then passed to a language model as context for generating the response. Grounding the response in retrieved text tends to give more accurate and reliable answers than relying on the model alone.

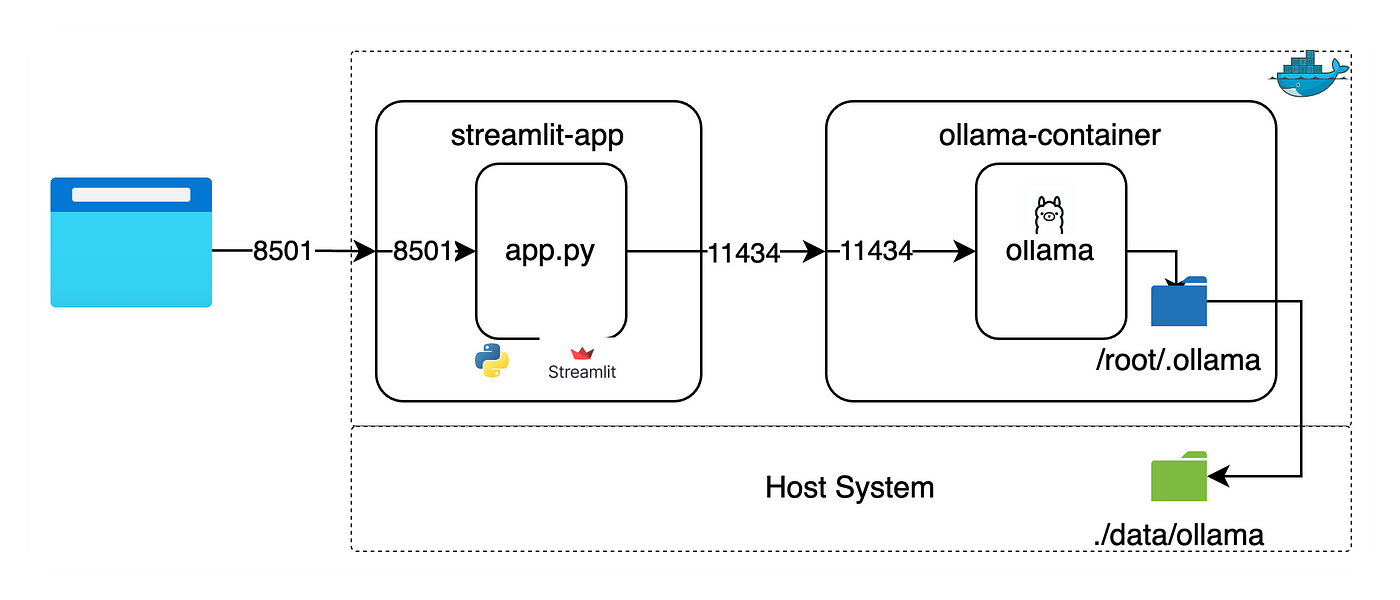
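The retrieve, augment, generate flow described above can be sketched without any model at all. The following is a toy illustration only: the word-overlap retriever, the `DOCUMENTS` list, and the `fake_generate` stub are all hypothetical stand-ins, not part of Ollama or LangChain.

```python
# Toy illustration of retrieve -> augment -> generate. No real model is called.

DOCUMENTS = [
    "Ollama runs large language models on a local machine.",
    "LangChain connects models, retrievers, and prompts into pipelines.",
    "Chroma is a vector store for embedded text chunks.",
]


def tokenize(text):
    """Lowercase the text and split it into a set of bare words."""
    return {word.strip(".,?!").lower() for word in text.split()}


def retrieve(question, documents, k=1):
    """Return the k documents sharing the most words with the question."""
    q_words = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q_words & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, context_docs):
    """Augment the question with the retrieved context."""
    context = "\n".join(context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


def fake_generate(prompt):
    """Stand-in for an LLM call; it only reports the prompt size."""
    return f"[model would answer here, given {len(prompt)} prompt characters]"


question = "What is Chroma?"
top_docs = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, top_docs)
print(prompt)
print(fake_generate(prompt))
```

In a real pipeline the word-overlap ranking is replaced by embedding similarity, and the stub by a call to a language model.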

2. Introduction to Ollama and LangChain

- Ollama: A tool for running open large language models such as Llama 2, Mistral, and Gemma on your own machine. It serves them through a local HTTP API, by default at http://localhost:11434.

- LangChain: A framework for building applications around language models. It provides composable modules such as document loaders, text splitters, vector stores, and chains that can be combined and extended.

3. Steps to Implement RAG using Ollama and LangChain

Here are the steps to implement RAG using Ollama and LangChain.

Step 1: Install Required Modules

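    pip install ollama
    pip install chromadb
    pip install langchain langchain-community beautifulsoup4

Install the necessary modules. The examples below import from langchain_community, and WebBaseLoader in Step 5 depends on beautifulsoup4. The Ollama application itself must be installed and running, and each model is downloaded separately through its CLI, for example with ollama pull llama2.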

Step 2: Implement Basic Chat

    from langchain_community.llms import Ollama

    # Requires a running Ollama server and a pulled model (ollama pull llama2)
    llm = Ollama(model="llama2")
    print(llm.invoke("Tell me a joke"))

Load the Ollama model and implement basic chat functionality.

Step 3: Complete Sentences

    llm = Ollama(model="mistral")
    print(llm.invoke("The first man on the summit of Mount Everest, the highest peak on Earth, was ..."))

Try a sentence completion with the Mistral model, reusing the Ollama import from Step 2.

Step 4: Generate Real-time Responses

    from langchain.callbacks.manager import CallbackManager
    from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

    # The handler prints each token to stdout as soon as the model produces it
    llm = Ollama(
        model="mistral",
        callback_manager=CallbackManager([StreamingStdOutCallbackHandler()]),
    )
    llm.invoke("The first man on the summit of Mount Everest, the highest peak on Earth, was ...")

Use LangChain’s callback functions to print the response in real time, token by token, instead of waiting for the complete answer.
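To make the callback idea concrete, here is a minimal sketch of how a streaming handler receives tokens. It does not use LangChain: `PrintTokenHandler`, `fake_stream`, and `generate` are hypothetical names, and the canned reply stands in for a model. Only the hook name `on_llm_new_token` mirrors the method that LangChain calls on its handlers.

```python
import sys


class PrintTokenHandler:
    """Simplified stand-in for a streaming handler (not LangChain's class)."""

    def __init__(self, stream=sys.stdout):
        self.stream = stream
        self.tokens = []

    def on_llm_new_token(self, token):
        # Record the token and show it immediately instead of buffering.
        self.tokens.append(token)
        self.stream.write(token)
        self.stream.flush()


def fake_stream(prompt):
    """Stand-in for a model: ignores the prompt and yields a canned reply."""
    for word in "tokens arrive one at a time".split(" "):
        yield word + " "


def generate(prompt, handler):
    """Pass every token to the handler as it arrives, then return the full text."""
    pieces = []
    for token in fake_stream(prompt):
        handler.on_llm_new_token(token)
        pieces.append(token)
    return "".join(pieces).strip()


handler = PrintTokenHandler()
answer = generate("The first man on the summit of Mount Everest was ...", handler)
```

The caller still gets the complete text at the end; the handler only changes when the user sees it.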

Step 5: Embed and Retrieve Documents

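    from langchain_community.document_loaders import WebBaseLoader
    from langchain.text_splitter import RecursiveCharacterTextSplitter

    # Download the page and wrap it in LangChain Document objects
    loader = WebBaseLoader("https://www.gutenberg.org/files/1727/1727-h/1727-h.htm")
    data = loader.load()

    # Split into chunks of roughly 500 characters, with no overlap between chunks
    text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=0)
    all_splits = text_splitter.split_documents(data)

Load the document and split it into chunks of an appropriate size, so that each chunk can be embedded and retrieved on its own.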
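The roles of chunk_size and chunk_overlap are easier to see on a tiny input. The sketch below is a plain fixed-width character splitter written for illustration; `split_with_overlap` is a hypothetical helper and is not how RecursiveCharacterTextSplitter works internally, since that class also prefers to break on separators such as paragraphs and sentences.

```python
def split_with_overlap(text, chunk_size, chunk_overlap):
    """Cut text into windows of chunk_size characters, each starting
    chunk_size - chunk_overlap characters after the previous one."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


sample = "abcdefghijklmnopqrstuvwxyz"
print(split_with_overlap(sample, chunk_size=10, chunk_overlap=0))
# ['abcdefghij', 'klmnopqrst', 'uvwxyz']
print(split_with_overlap(sample, chunk_size=10, chunk_overlap=3))
# ['abcdefghij', 'hijklmnopq', 'opqrstuvwx', 'vwxyz']
```

With an overlap of 0, as in Step 5, a sentence that straddles a chunk boundary is cut in two. A small overlap repeats the boundary text in both chunks at the cost of storing more vectors.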

    from langchain_community.embeddings import OllamaEmbeddings
    from langchain_community.vectorstores import Chroma

    # Embeddings are computed by the local Ollama server
    oembed = OllamaEmbeddings(base_url="http://localhost:11434", model="mistral")
    vectorstore = Chroma.from_documents(documents=all_splits, embedding=oembed)

Embed each chunk and store the resulting vectors in ChromaDB.

    question = "What's the name of the main character?"
    docs = vectorstore.similarity_search(question)
    print(len(docs))
    print(docs)

Search the stored chunks for those most similar to the question. These retrieved chunks are the context the model will answer from.
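Conceptually, a similarity search embeds the question and ranks the stored vectors by how close they are to it. The sketch below shows that ranking with cosine similarity on hand-made 3-dimensional vectors. The vectors, the chunk labels, and the `top_k` helper are illustrative assumptions; real embeddings have far more dimensions, and the vector store may use a different metric (for example L2 distance) depending on its configuration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, store, k=2):
    """Return the texts of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


# Hand-made "embeddings", purely illustrative.
store = [
    ("chunk A", [0.9, 0.1, 0.0]),
    ("chunk B", [0.1, 0.9, 0.0]),
    ("chunk C", [0.7, 0.2, 0.1]),
]
query_vec = [1.0, 0.0, 0.0]
print(top_k(query_vec, store))  # ['chunk A', 'chunk C']
```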

Step 6: Generate Responses

    from langchain_community.llms import Ollama
    from langchain.chains import RetrievalQA

    ollama = Ollama(base_url="http://localhost:11434", model="gemma")
    qachain = RetrievalQA.from_chain_type(ollama, retriever=vectorstore.as_retriever())
    result = qachain.invoke({"query": question})
    print(result)

Use LangChain’s RetrievalQA chain to retrieve the relevant chunks and generate a response based on them. The returned value is a dictionary; the generated answer is stored under the "result" key.
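Printing the raw dictionary is hard to read, so a small formatter can help. The sketch below assumes the dictionary shape that RetrievalQA returns: "query" and "result" keys, plus "source_documents" when the chain is created with return_source_documents=True. The `format_answer` helper and the sample dictionary are hypothetical, and the placeholder strings are not real model output.

```python
def format_answer(result, max_chars=60):
    """Turn a RetrievalQA-style result dict into readable text."""
    lines = [f"Q: {result['query']}", f"A: {result['result']}"]
    for i, doc in enumerate(result.get("source_documents", []), start=1):
        # Real source documents expose their text as .page_content;
        # plain strings are accepted here to keep the sketch dependency-free.
        text = getattr(doc, "page_content", doc)
        lines.append(f"  source {i}: {text[:max_chars]}")
    return "\n".join(lines)


# Hand-written stand-in for the dict a QA chain returns (not real model output).
sample_result = {
    "query": "What's the name of the main character?",
    "result": "<answer text from the model>",
    "source_documents": ["<first retrieved chunk>", "<second retrieved chunk>"],
}
formatted = format_answer(sample_result)
print(formatted)
```

Showing the source chunks next to the answer makes it easier to check whether the response is actually grounded in the retrieved text.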

4. Practical Example

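Beyond text retrieval, Ollama can also serve multimodal models. Here’s an example of handling image-based questions with the LLaVA model in a Jupyter notebook.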

    import base64
    from io import BytesIO

    from IPython.display import HTML, display
    from langchain_community.llms import Ollama
    from PIL import Image

    def convert_to_base64(pil_image):
        """Encode a PIL image as a base64 JPEG string."""
        buffered = BytesIO()
        # JPEG has no alpha channel, so convert to RGB first
        pil_image.convert("RGB").save(buffered, format="JPEG")
        img_str = base64.b64encode(buffered.getvalue()).decode("utf-8")
        return img_str

    def plt_img_base64(img_base64):
        """Display a base64-encoded JPEG in a notebook."""
        image_html = f'<img src="data:image/jpeg;base64,{img_base64}" />'
        display(HTML(image_html))

    file_path = "pets_sample_img.jpg"
    pil_image = Image.open(file_path)
    image_b64 = convert_to_base64(pil_image)
    plt_img_base64(image_b64)

    # Assumes the multimodal LLaVA model has been pulled (ollama pull llava)
    llava = Ollama(model="llava")
    llm_with_image_context = llava.bind(images=[image_b64])
    print(llm_with_image_context.invoke("How many pets are in the image?"))

Check the model’s versatility by answering questions based on an image.

Conclusion

Implementing RAG with Ollama and LangChain lets you retrieve relevant information and generate natural responses grounded in it, all with models running locally. In this guide you have seen the basic concepts of RAG and practiced each step: loading and splitting documents, embedding them into a vector store, retrieving relevant chunks, and generating an answer. The same pipeline can be pointed at your own documents to build applications that answer more reliably.