Converting HTML to Markdown is harder than it looks. Web pages often have deeply nested structure and contain a lot of noise, which makes it difficult to extract the main content cleanly and convert it. To address this, Jina AI has released Reader-LM, a family of small language models (SLMs) built for HTML-to-Markdown conversion. The models selectively copy the key content from the HTML into Markdown and support long input contexts.

Background of Reader-LM

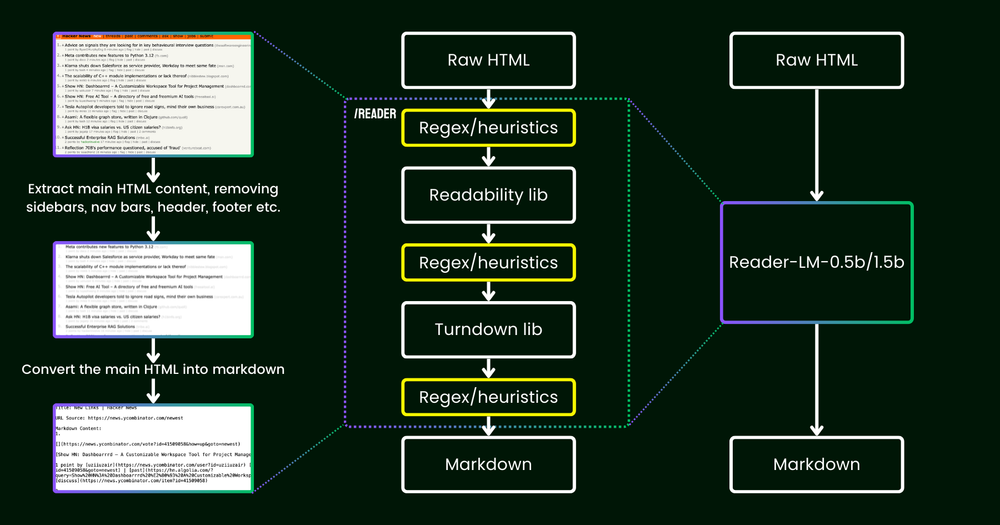

In April 2024, Jina AI introduced Jina Reader, an API that converts a webpage URL into Markdown. The API fetched the page source with a headless Chrome browser, extracted the main content with the Readability package, and then converted the cleaned HTML to Markdown using regular expressions and the Turndown library.

However, this pipeline had accuracy problems. The Readability filter sometimes failed to remove unwanted content, and Turndown struggled to convert certain HTML tags into Markdown. To address these problems, Jina AI began developing its own small language model for HTML-to-Markdown conversion.
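To make the limitation concrete, here is a toy rule-based converter in the spirit of the regex-based step described above. It is not Jina Reader's code, and the function name `regex_html_to_markdown` is hypothetical; the real pipeline used Readability and Turndown, which are far more thorough. The point is how easily fixed rules miss variations in real HTML.

```python
import re


def regex_html_to_markdown(html: str) -> str:
    """Toy rule-based HTML-to-Markdown conversion (illustration only)."""
    flags = re.S | re.I
    md = html
    # Drop <script> and <style> blocks entirely.
    md = re.sub(r"<(script|style)\b[^>]*>.*?</\1>", "", md, flags=flags)
    # <h1>..<h6> -> '#' repeated by heading level.
    md = re.sub(r"<h([1-6])[^>]*>(.*?)</h\1>",
                lambda m: "#" * int(m.group(1)) + " " + m.group(2).strip() + "\n\n",
                md, flags=flags)
    # Links: only double-quoted href values are recognized (a deliberate weakness).
    md = re.sub(r'<a\s+[^>]*href="([^"]*)"[^>]*>(.*?)</a>', r"[\2](\1)", md, flags=flags)
    # Bold and italic.
    md = re.sub(r"<(strong|b)>(.*?)</\1>", r"**\2**", md, flags=flags)
    md = re.sub(r"<(em|i)>(.*?)</\1>", r"*\2*", md, flags=flags)
    # List items become '- ' bullets (\b keeps <link> from matching).
    md = re.sub(r"<li\b[^>]*>(.*?)</li>", r"- \1\n", md, flags=flags)
    # Paragraph ends become blank lines.
    md = re.sub(r"</p>", "\n\n", md, flags=re.I)
    # Strip every remaining tag, then collapse runs of blank lines.
    md = re.sub(r"<[^>]+>", "", md)
    md = re.sub(r"\n{3,}", "\n\n", md)
    return md.strip()


sample = ('<h1>Title</h1><p>Read <a href="https://example.com">this</a> '
          '<b>now</b>.</p><ul><li>one</li><li>two</li></ul>')
print(regex_html_to_markdown(sample))
print(regex_html_to_markdown("<a href='https://example.com'>site</a>"))  # -> site
```

The second call shows the brittleness: because the link rule expects a double-quoted `href`, a single-quoted attribute falls through to the generic tag stripper and the URL is silently lost. Real pages contain countless variations like this, which is the gap a learned model is meant to close.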

Technical Features of Reader-LM

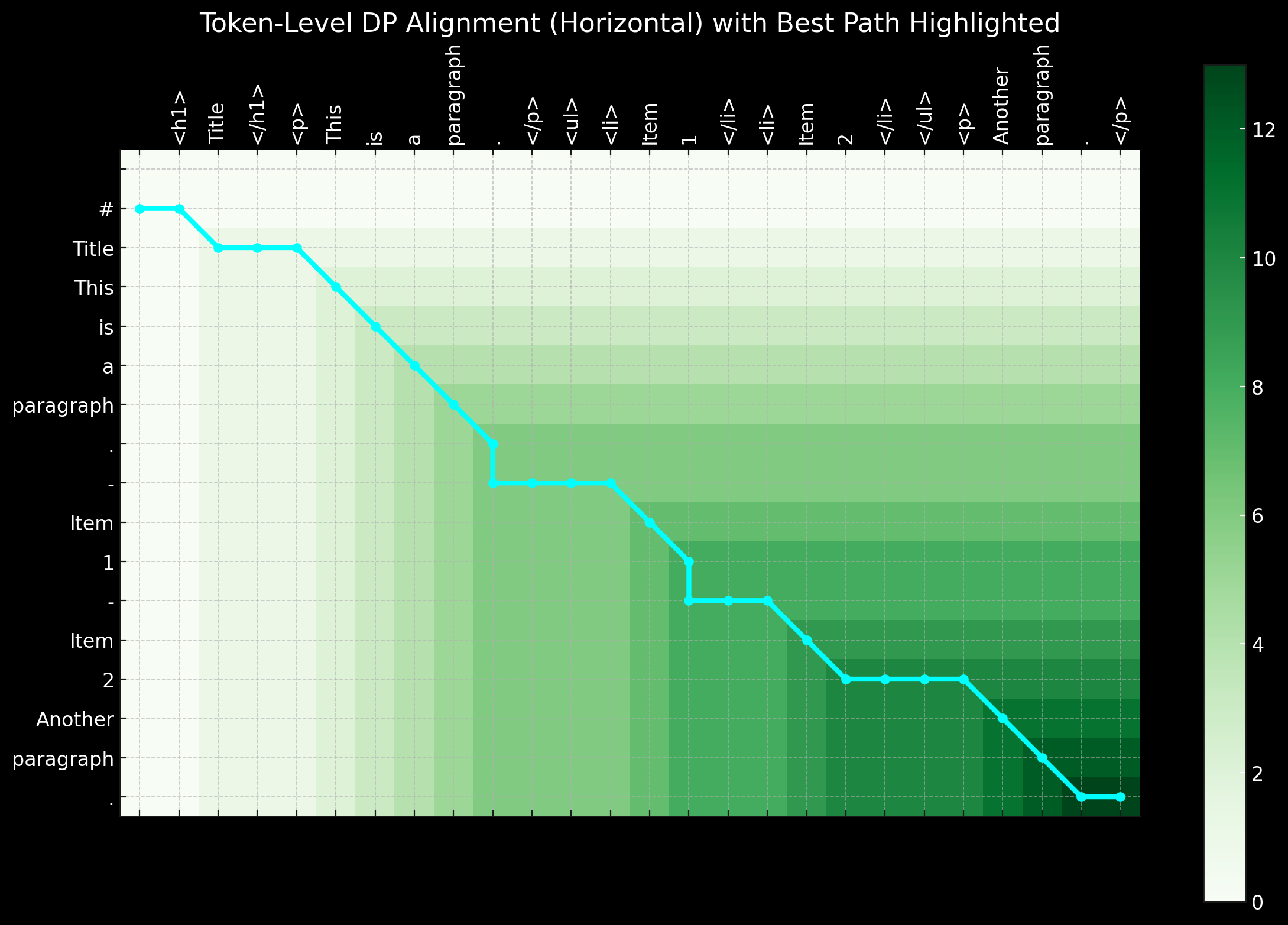

Converting HTML to Markdown calls for little creativity; it is mostly a matter of selectively copying content from the input to the output. Jina AI therefore chose a small model with a “shallow-but-wide” architecture instead of a large LLM, on the reasoning that this copy-heavy task needs relatively few transformer blocks. Reader-LM is available in two versions:

- reader-lm-0.5b: A model with 494M parameters

- reader-lm-1.5b: A model with 1.54B parameters
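The "selective copy" framing can be checked on any input/output pair. The sketch below, using only the Python standard library, measures what fraction of the words in a Markdown output also occur in the visible text of the source HTML. The function `copy_ratio` and the heuristic itself are illustrative assumptions, not a metric from the article: a value near 1.0 means the output is almost entirely copied, while words absent from the source hint at hallucinated content.

```python
import re
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def words(text: str) -> list[str]:
    """Lowercased word tokens; punctuation and Markdown symbols are ignored."""
    return re.findall(r"\w+", text.lower())


def copy_ratio(html: str, markdown: str) -> float:
    """Share of output words that also appear in the HTML's visible text."""
    extractor = VisibleTextExtractor()
    extractor.feed(html)
    extractor.close()
    source_vocab = set(words(" ".join(extractor.chunks)))
    output_words = words(markdown)
    if not output_words:
        return 1.0  # an empty output introduces no foreign words
    copied = sum(1 for w in output_words if w in source_vocab)
    return copied / len(output_words)


page = ("<html><body><h1>Reader LM</h1><p>Converts HTML to Markdown.</p>"
        "<script>var x = 1;</script></body></html>")
print(copy_ratio(page, "# Reader LM\n\nConverts HTML to Markdown."))  # 1.0
print(copy_ratio(page, "# Reader LM\n\nConverts PDF to Markdown."))   # 5 of 6 words copied
```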

Both models support a context length of up to 256K tokens, which is essential because typical webpage sources are long and complex.
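Because Reader-LM generates its output autoregressively, the HTML prompt and the generated Markdown share the same context window, so a long page also needs headroom for the output. The rough budget check below assumes about 3 characters per token for tag-heavy HTML and an output about a quarter the length of the input; both numbers are illustrative guesses, not figures from Jina AI, and counting tokens with the model's own tokenizer is more reliable. The limit is written as 256,000 here; the exact value should be confirmed in the model configuration.

```python
import math

# Illustrative assumptions, not published figures.
MAX_CONTEXT_TOKENS = 256_000   # "256K" as stated in the article; check the model config
CHARS_PER_TOKEN = 3.0          # rough guess for tag-heavy HTML
OUTPUT_TO_INPUT_RATIO = 0.25   # rough guess: Markdown is much shorter than raw HTML


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Very rough token estimate from the character count."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(html: str,
                    max_context: int = MAX_CONTEXT_TOKENS,
                    output_ratio: float = OUTPUT_TO_INPUT_RATIO) -> bool:
    """True if the prompt plus the expected output fits in one context window."""
    prompt_tokens = estimate_tokens(html)
    output_tokens = math.ceil(prompt_tokens * output_ratio)
    return prompt_tokens + output_tokens <= max_context


print(fits_in_context("x" * 600_000))  # 200,000 + 50,000 tokens -> True
print(fits_in_context("x" * 700_000))  # 233,334 + 58,334 tokens -> False
```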

Performance of Reader-LM

Reader-LM was evaluated with ROUGE-L (higher is better), Token Error Rate (TER), and Word Error Rate (WER) (lower is better for both), and it scored well on all three. Notably, reader-lm-1.5b achieved a ROUGE-L score of 0.72 and a WER of 1.87, outperforming the competing models in the comparison. In a visual inspection of webpages converted to Markdown, reader-lm-1.5b also did best at extracting headers, retaining the main content, and producing correct Markdown syntax.
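ROUGE-L scores an output by the longest common subsequence (LCS) of words it shares with a reference: precision is LCS divided by the output length, recall is LCS divided by the reference length, and the score combines the two. The sketch below computes the F1 form with plain whitespace tokenization. The article does not say which ROUGE-L implementation or tokenization Jina AI used, so this illustrates the metric rather than reproducing the 0.72 figure.

```python
def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence, via dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference: str, candidate: str) -> float:
    """ROUGE-L F1 over whitespace-separated tokens."""
    ref, cand = reference.split(), candidate.split()
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0  # also covers empty inputs, avoiding division by zero
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


ref = "# Title\n\nThe cat sat on the mat."
print(rouge_l_f1(ref, ref))  # 1.0
print(round(rouge_l_f1("the cat sat on the mat", "the cat is on the mat"), 3))  # 0.833
```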

Key results for reader-lm-1.5b:

- ROUGE-L: 0.72

- WER: 1.87
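WER is the word-level edit distance (substitutions + deletions + insertions) between an output and a reference, divided by the number of words in the reference. Because insertions count as errors, WER is not bounded by 1, which is why a value such as 1.87 is possible; lower is better. The sketch below implements that standard definition with whitespace tokenization; the article does not describe Jina AI's exact tokenization or normalization.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # One row of the DP table: distance between the reference words seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            substitution_cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,                       # deletion
                            curr[j - 1] + 1,                   # insertion
                            prev[j - 1] + substitution_cost))  # match / substitution
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("hello world", "hello world"))                # 0.0
print(word_error_rate("hello world", "hello there big world out"))  # 1.5 (3 insertions / 2 words)
```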

Commercialization and Use Cases

Reader-LM was designed with real-world deployment in mind. Jina AI provides a Google Colab notebook in which users can try Reader-LM by converting content from Hacker News into Markdown. For production use, Jina AI recommends a high-end GPU such as an RTX 3090 or 4090.

Jina AI plans to offer Reader-LM on Azure Marketplace and AWS SageMaker. The models themselves are released under CC BY-NC 4.0, a non-commercial license, so commercial use requires a separate inquiry to Jina AI.

Future Challenges and Improvements

Although Reader-LM already performs well, there is still room for improvement. Jina AI plans to extend the model's context length, speed up decoding, and add support for instructions in the input. Because HTML is so noisy and complex, Jina AI is also researching more efficient ways to process it.

Conclusion

Reader-LM is a compact language model that performs strongly at extracting web content and converting it to Markdown. It needs far fewer resources than large LLMs yet still handles long contexts, which makes it a practical option for many web-data tasks. With further development planned by Jina AI, Reader-LM is a strong candidate for anyone who works with web data.

Reference: Jina AI, “Reader-LM: Small Language Models for Cleaning and Converting HTML to Markdown”